Is Google filtering your business in the local search results?

Seeing a shift in Google’s local results? It’s not just you. Columnist Joy Hawkins documents what appears to be a recent refresh to Google’s local ranking filter.

It seems apparent that Google has a local filter that goes through all the listings eligible to appear in the 3-pack and in the Local Finder (which you see when you click “More Places”), then filters some out based on their spam score.

Bill Slawski wrote about this in July and referenced the relevant patent, which was granted on June 21, 2016. To quote the patent:

A spam score is assigned to a business listing when the listing is received at a search entity. A noise function is added to the spam score such that the spam score is varied. In the event that the spam score is greater than a first threshold, the listing is identified as fraudulent and the listing is not included in (or is removed from) the group of searchable business listings.
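To make the quoted mechanism concrete, here is a minimal sketch of a score-plus-noise threshold filter. It is only an illustration of the idea in the patent text, not Google's implementation: the threshold value, the uniform noise, the noise range and the example listing names are all assumptions made up for this example.

```python
import random

# Hypothetical values; the patent quote gives no actual numbers.
FRAUD_THRESHOLD = 0.8
NOISE_SCALE = 0.05


def noisy_spam_score(raw_score, rng, noise_scale=NOISE_SCALE):
    """Return the raw spam score plus a small random perturbation."""
    return raw_score + rng.uniform(-noise_scale, noise_scale)


def filter_listings(listings, rng, threshold=FRAUD_THRESHOLD,
                    noise_scale=NOISE_SCALE):
    """Keep the names of listings whose noisy score does not exceed the threshold.

    `listings` is an iterable of (name, raw_spam_score) pairs.
    """
    kept = []
    for name, raw_score in listings:
        if noisy_spam_score(raw_score, rng, noise_scale) > threshold:
            continue  # treated as fraudulent, so left out of the results
        kept.append(name)
    return kept


if __name__ == "__main__":
    # Made-up listings and scores, purely for illustration.
    sample = [
        ("Long-established locksmith", 0.20),
        ("Borderline listing", 0.80),
        ("Obvious fake", 0.95),
    ]
    print(filter_listings(sample, random.Random()))
```

With these assumed numbers, a listing scoring far below or far above the threshold gets the same outcome on every run, while a listing sitting right at the threshold can land on either side depending on the noise.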

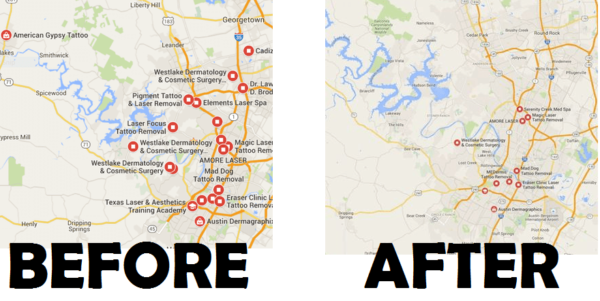

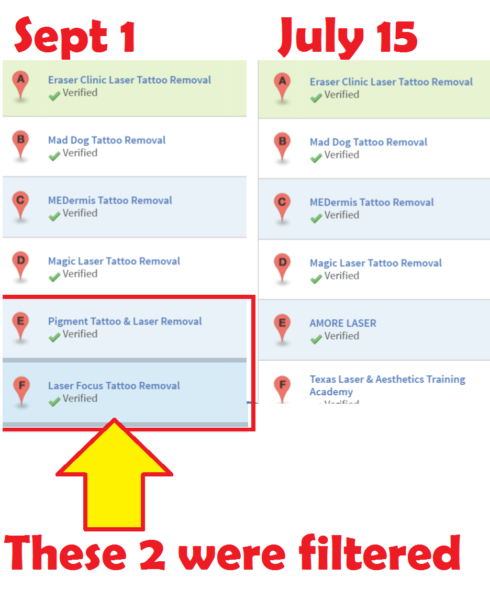

I started seeing more of this filter in July of this year, when people began reporting that the local search results were shrinking and eliminating many businesses that had previously been visible. The screenshot below helps show the difference the filter makes. Several businesses that were included in the list before July 2016 were eliminated in July.

Then on September 1, the results completely changed again, and many of the previously filtered listings are now included.

Some observations that I’ve made about the filter so far are:

- The filter doesn’t run in real time. We saw an update sometime at the beginning of July that shrank a lot of the local results. After that, the results didn’t update at all until September 1. No new businesses were included and none from the existing list were eliminated for a period of two months. This leads me to believe that Google reruns this filter in cycles (which could be weeks or months).

- The filter is impacting some industries way more than others. In certain industries, it almost appears as if the filter doesn’t exist (or the criteria aren’t very strict), whereas in other industries more listings are getting filtered than are making the cut.

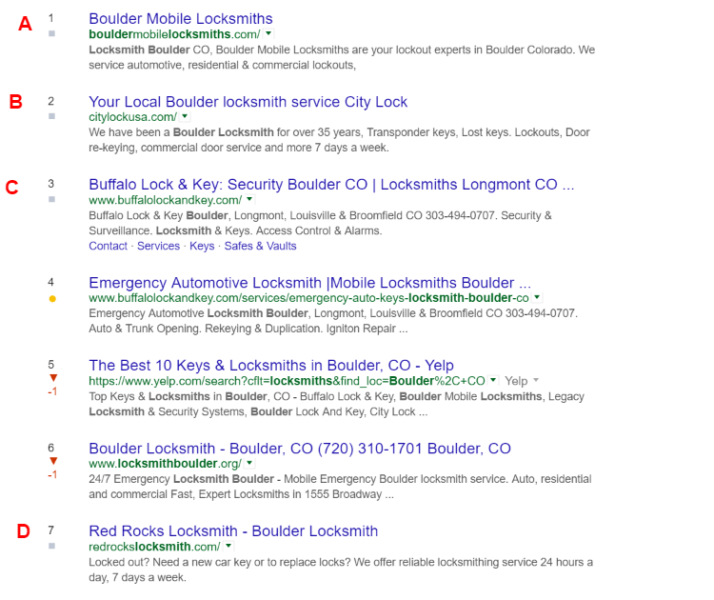

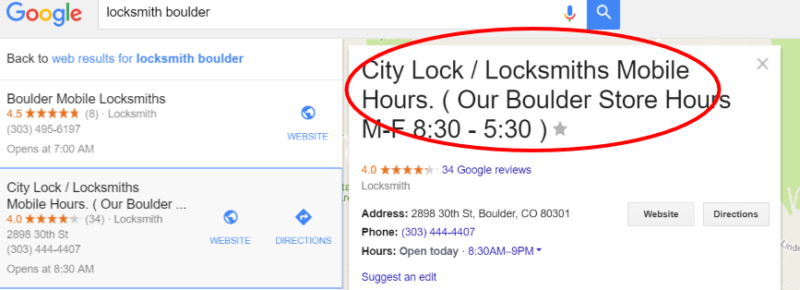

To look at one of the more extreme cases, I wanted to highlight some observations about the SERP below for “locksmith boulder,” which, as of July, showed only four businesses in the Local Finder.

I’m seeing the filter hit the locksmith industry harder than any other because of the number of fake listings that exist. Scott Barnett co-founded an online business directory called Bizyhood and, when he analyzed the locksmith listings in his directory, he found around 80% of them were fake.

What factors does Google filter based on?

Age of listing

I believe the age of the Google Maps listing is currently one of the strongest ranking factors for the 3-pack. When comparing the listings that made the cut vs. the businesses that didn’t, there was definitely a pattern here. I’ve historically found that old listings carry a ton of ranking power; in fact, they are often the target for spammers who like to hijack listings precisely because they are so strong.

Here are a few observations from the “locksmith boulder” set above:

- City Lock’s listing has reviews on it that are from six years ago.

- Buffalo Security’s listing has been around since 2011.

- One of the listings that is filtered only has reviews dating back six months, so it looks like a fairly new listing.

- Similarly, this listing (which also didn’t make it into the list of four) has only been around since February 2016.

Organic ranking

I don’t believe it’s any coincidence that all four of the locksmith listings ranking in the Boulder 3-pack have organic rankings in the top 10 as well.

Spammers on Google Maps have historically created fake listings without a strong organic presence, so it would make sense that Google is using this as an indicator. One of the businesses that didn’t make the cut ranked #91 organically for “locksmith boulder.” One of the others wasn’t even in the top 100.

Duplicate listings

In Slawski’s article, he notes that the patent mentions businesses employing methods to include multiple listings for the same business, so this is likely something Google looks at when applying the spam filter. One of the locksmiths that didn’t make the initial cut in July uses an address in a plaza, so there are dozens of other listings at the same address.

Coincidentally, there is also a UPS Store in the same plaza. Additionally, the suite number formatting on the other businesses in this plaza follows the same pattern (E104, D102, A111, etc.), yet Boulder Lock and Key (pin “F” in the image above) just lists its suite as “#3.” That could be enough to make Google suspicious of the listing and filter it initially.
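The suite-format comparison above is easy to try yourself when auditing listings at a shared address. The sketch below is a hypothetical helper, not anything Google is known to run: it reduces each suite string to a "shape" (letters become `A`, digits become `9`) and flags any suite whose shape differs from the most common one. The function names are invented for this example.

```python
import re
from collections import Counter


def suite_shape(suite):
    """Reduce a suite string to its shape: letters -> 'A', digits -> '9'."""
    shape = re.sub(r"[A-Za-z]", "A", suite.strip())
    return re.sub(r"\d", "9", shape)


def odd_suites(suites):
    """Return the suites whose shape differs from the most common shape."""
    if not suites:
        return []
    shapes = Counter(suite_shape(s) for s in suites)
    common_shape, _count = shapes.most_common(1)[0]
    return [s for s in suites if suite_shape(s) != common_shape]


if __name__ == "__main__":
    # Suite values taken from the plaza example in the text.
    print(odd_suites(["E104", "D102", "A111", "#3"]))
```

For the plaza in this example, "E104", "D102" and "A111" all share the shape `A999`, so "#3" is the only suite flagged. A mismatch like this is only a hint that a listing deserves a closer look, not proof that it is fake.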

When the results were refreshed on September 1, this particular business was included in the list. That might make sense, since by then the listing had been around longer than it had at the time of the July update.

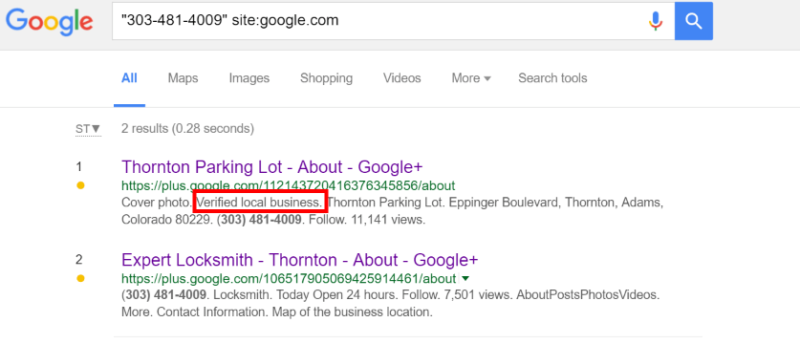

Keep in mind that when we look for duplicates, we might only see a fraction of what Google does. Google can also see listings that were created and later removed for various reasons. In another case in a nearby town, one of the filtered businesses previously had a listing verified for a parking lot using the same phone number (another typical pattern for spammers).

Spam filter vs. suspensions

It’s important to note that this filter is completely different from listing suspensions. Listings get suspended for different reasons, and in industries with a high percentage of spam, a business can have its listing suspended just for making a small change in the dashboard that triggers something.

In the last few months, I’ve seen one locksmith get suspended after hiding her address (to keep her listing within the guidelines) and another that got suspended right after he changed his description (before Google removed it). Earlier this year, I wrote about what gets listings suspended and what you need to know about them.

As further evidence that suspensions and this filter run independently, it’s important to note that one of these four listings in Boulder was suspended three times in 2015, according to the history on the listing. Listings sometimes get removed by active mappers on Google, but in every one of these suspensions it was Google that removed the listing. Despite being suspended that many times, it still ranked high and made the cut, whereas several other listings did not.

Suspensions also often involve manual processes for reinstating listings that were suspended wrongly. Things like business licenses and photos will help a business get reinstated (by proving it is a real business), whereas I don’t see either of those two things being used for this filter. One of the four listings didn’t even have a business license in Colorado under the name that it used.

Remember that the filter keeps these listings from appearing in specific search results for their industry, whereas a suspension either removes the listing entirely from Google or Google Maps (along with all reviews/photos attached) or un-verifies it, depending on which type of suspension it is.

Factors that don’t appear to contribute

Business name

The business name is one of the most misused fields on Google My Business in the sense that Google has very strict guidelines around how you should list yourself, yet businesses constantly add descriptors and keywords and get away with it because Google doesn’t appear to have a good method to automatically enforce these guidelines.

Business names get altered or adjusted when someone reports them or edits them via Google Maps or MapMaker. I have seen little evidence that Google does this on its own.

For this set of listings, the #2 business has very clear violations in its business title yet is not getting filtered. Everything that comes after “City Lock” would be considered a descriptor and is “not allowed.”

In summary, Google still has a long way to go to get rid of all the spam that exists, but hopefully in the meantime users will continue to report fake listings to help level the playing field for those who are actually trying to abide by the rules.

It’s also possible that the update we saw on September 1 was actually an algo tweak, not just a refresh. I can look at the patterns and analyze things, but ultimately the only ones who really know how the algorithm works are Google staffers themselves!

If you have examples or questions about some of these recent changes, I’d love to hear about them. Feel free to tweet me examples or leave details on the thread on the Local Search Forum.